Accessibility assessment

An extensive accessibility audit of two financial technology products for WCAG (Web Content Accessibility Guidelines) AA compliance.

Responsibilities

Performed audit

Created and designed report

Presented to client

Team

Audit: Myself and a full-stack engineer

Management: Project manager and experience director, part-time

Report: Myself, a UX designer who helped with visuals, and the experience director, who assisted with content

Duration

May 2018 (two weeks)

Overview

The problem

Our client asked us to review two of their most popular web products, both of which provide quick online credit. They needed WCAG assessments to identify where the products fell short of accessibility standards.

Our solution

We first needed to understand the client’s goals for the audit, since WCAG defines varying levels of compliance (A, AA, and AAA). The client’s goal: “We don’t want a lawsuit.” Based on that, we provided experience and technology recommendations to meet the AA level.

Project background

This was the first time our team had worked on an accessibility assessment, so there was a big learning curve for everyone, myself included. The deliverables promised to the client were an experience design assessment and a technology assessment for each online credit application.

Once we dug into the products and ramped up on WCAG compliance, we quickly realized there was a lot more to it than we had initially thought. More on that later.

The audit process

1. Scanning tool

Used a browser extension that automatically tested the code for violations.

2. Manual code analysis

Examined the HTML for the technical criteria that the scanning tool can’t find.

3. Keyboard testing

Every action on each page was attempted using only the keyboard.

4. Screen reader testing

Each page was analyzed with a text-to-speech screen reader.

5. Manual color analysis

Analyzed the color contrast of elements that the scanning tool missed.

1. Scanning tool

One of our early misconceptions was that an automated scanning tool would find many of the violations for us. Although that wasn’t the case, it still gave us a starting point. We relied on a powerful tool called axe DevTools, a Chrome browser extension. The tool identified 51 different violations automatically.

The tool also provides thorough documentation on all the accessibility rules it checks for. We leveraged a lot of this content for our report, including its user impact scale.

2. Manual code analysis

The remaining WCAG success criteria required manual checks. Another misconception our team had was that there would be a roughly even split between technical and design-based accessibility rules. In fact, the vast majority relate to HTML markup. To finish the audit within the estimated time, we had to divide and conquer, so I had to ramp up quickly on coding conventions. Fortunately, I could rely on my teammate’s engineering knowledge for a lot of help.

3. Keyboard testing

Every action on each page was attempted using only the keyboard. There was a big learning curve in figuring out the keystrokes needed to navigate the entire flow. We gained a huge amount of respect for those who rely on keyboards to navigate a webpage.

Inaccessible elements and awkward interactions were called out explicitly. We found many global issues, including missing focus indicators, a confusing navigation order, and even a few controls that could not be reached by keyboard at all.

4. Screen reader testing

Each page was analyzed with VoiceOver, the screen reader built into macOS. It took us a lot of practice to get used to how quickly the screen reader speaks the content.

5. Manual color analysis

The scanning tool we used checks the contrast of text against its background. But it can’t test the color contrast of focus and hover states, or the contrast between links and the surrounding body copy, so we assessed those manually.
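For anyone checking these cases by hand, it helps to know how contrast is measured. WCAG 2.0 defines it as a ratio of the relative luminance of two colors, from 1:1 to 21:1, and AA asks for at least 4.5:1 for normal text and 3:1 for large text. The sketch below is a minimal Python version of that published formula, not the scanning tool’s own code; the function names are our own illustration.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.0 formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color written as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(color_a: str, color_b: str) -> float:
    """(lighter + 0.05) / (darker + 0.05); the argument order does not matter."""
    lighter, darker = sorted(
        (relative_luminance(color_a), relative_luminance(color_b)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def meets_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """AA text contrast: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


if __name__ == "__main__":
    for fg in ("#767676", "#777777"):
        ratio = contrast_ratio(fg, "#FFFFFF")
        print(f"{fg} on white: {ratio:.2f}:1, passes AA for normal text: {meets_aa(fg, '#FFFFFF')}")
```

The same contrast_ratio function can be pointed at a focus or hover color against its background, or at link color against body copy. For links distinguished by color alone, WCAG technique G183 suggests at least 3:1 between the link and the surrounding text.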

We also provided accessible color combinations using updated shades and tints.
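One simple way to arrive at an accessible shade or tint is to blend the original color toward black (a shade) or white (a tint) in small steps until it reaches the target ratio. The sketch below shows that approach under our own assumptions (plain linear blending in sRGB, 100 steps, hypothetical function names); it repeats the WCAG 2.0 luminance formula so it runs on its own.

```python
def _parse(hex_color):
    """Split '#RRGGBB' into three 0-255 integers."""
    h = hex_color.lstrip("#")
    return tuple(int(h[i:i + 2], 16) for i in (0, 2, 4))


def _linearize(channel):
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def _luminance(hex_color):
    r, g, b = _parse(hex_color)
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(color_a, color_b):
    lighter, darker = sorted((_luminance(color_a), _luminance(color_b)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def _mix(hex_color, toward, t):
    """Linear blend in sRGB: t=0 keeps the color, t=1 returns `toward`."""
    r, g, b = (round(s + (d - s) * t) for s, d in zip(_parse(hex_color), _parse(toward)))
    return f"#{r:02X}{g:02X}{b:02X}"


def adjust_until_accessible(foreground, background, toward="#000000", target=4.5, steps=100):
    """Return the first blend of `foreground` toward `toward` (black = shade,
    white = tint) that meets `target` against `background`, or None if even
    the fully blended color falls short."""
    for i in range(steps + 1):
        candidate = _mix(foreground, toward, i / steps)
        if contrast_ratio(candidate, background) >= target:
            return candidate
    return None


if __name__ == "__main__":
    print(adjust_until_accessible("#777777", "#FFFFFF"))  # darker gray for white backgrounds
    print(adjust_until_accessible("#333333", "#000000", toward="#FFFFFF"))  # lighter tint for black
```

Because the search stops at the first passing step, the result stays as close to the original brand color as this blending allows. A designer may still prefer to adjust hue or saturation by eye; the code only finds a starting point.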

The deliverables

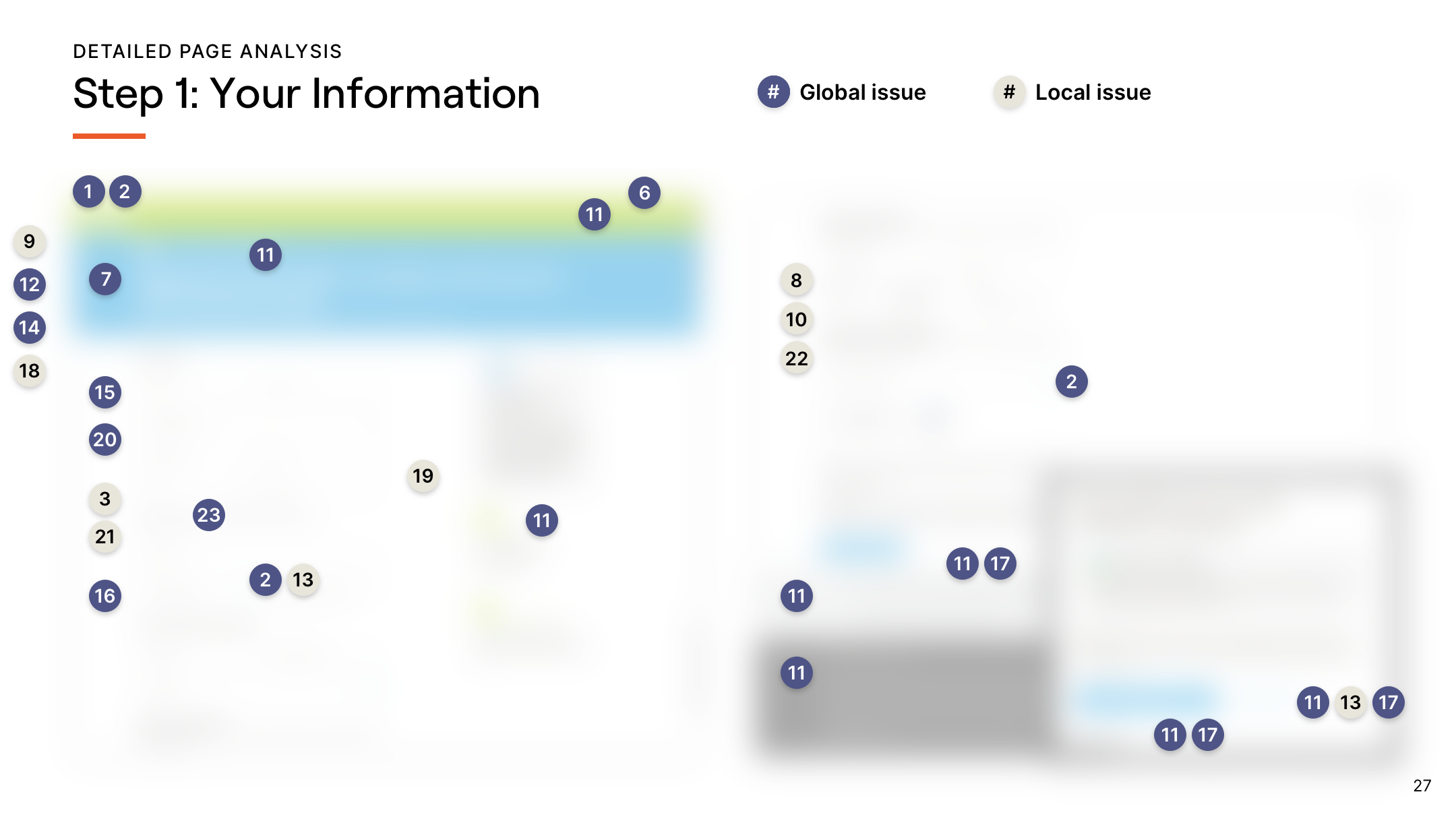

Detailed analysis

Here’s a sample of slides from the report. The client’s name, product names, and some product screenshots have been redacted.

List of violations

After we finished presenting the Keynote deck, one of the first things a stakeholder said was, “See, this is what I hate about agencies. They present these big, beautiful decks, when what we really need is a spreadsheet.” The client rightly pointed out that a spreadsheet listing all of the violations would have sufficed, since the product owner could then copy each violation into their backlog as its own story for prioritization.

With that feedback, we quickly delivered that spreadsheet so the client could start resolving these accessibility issues.
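A spreadsheet like this is easy to generate once violations are recorded as structured data. The sketch below assumes one row per violation with hypothetical columns (page, success criterion, impact, description, recommendation) and sorts rows by axe’s four impact levels (critical, serious, moderate, minor) so the most severe issues sit at the top of the backlog. The sample rows are illustrative, not findings from this audit.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields

# Severity order for sorting; unknown impact values sort last.
IMPACT_ORDER = {"critical": 0, "serious": 1, "moderate": 2, "minor": 3}


@dataclass
class Violation:
    page: str            # hypothetical page label, e.g. "Application step 2"
    criterion: str       # WCAG success criterion, e.g. "1.4.3 Contrast (Minimum)"
    impact: str          # one of the keys in IMPACT_ORDER
    description: str
    recommendation: str


def violations_to_csv(violations):
    """Return CSV text with a header row and one row per violation, most severe first."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(Violation)])
    writer.writeheader()
    ordered = sorted(violations, key=lambda v: IMPACT_ORDER.get(v.impact, len(IMPACT_ORDER)))
    for violation in ordered:
        writer.writerow(asdict(violation))
    return buffer.getvalue()


if __name__ == "__main__":
    sample = [
        Violation("Application step 1", "2.4.7 Focus Visible", "serious",
                  "Buttons show no focus indicator", "Add a visible focus style"),
        Violation("Application step 2", "2.1.1 Keyboard", "critical",
                  "Date picker cannot be opened by keyboard", "Use a focusable control"),
    ]
    print(violations_to_csv(sample))
```

The resulting file opens directly in a spreadsheet app, where each row can be copied into the backlog as its own story.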

Lessons learned

A much larger scope than estimated

The client asked us to assess two of their most popular products, both online applications for lines of credit and installment loans. At first glance, one application was only three pages of forms and the other was seven pages. But once we started digging into the experience, we learned that the two application flows contained 10 additional pages between them that would require auditing.

Evaluating web accessibility isn’t so black-and-white

Our team initially assumed WCAG was a simple checklist of objective pass/fail tests. But reading the guidance for each of the 38 success criteria at levels A and AA revealed that it wasn’t so black-and-white. Many of the criteria are open to a wide range of interpretation, with varying degrees of sufficiency in how they can be met.

Automated scanning tools only get you a small part of the way

We knew that several automated accessibility scanning tools were available and naively believed they would catch the majority of the violations for us. Of course, that’s not the case at all: these tools capture only a very small sliver of the experience.

The majority of guidelines are technical, not design-based

We assumed the success criteria would split roughly 50/50 between technology and experience. In reality, at least 90% of them are technical and relate to HTML markup.

There’s a lot of content to put together for a thorough report

We estimated that it would take just 2–3 days to create the report itself. But because WCAG is not written in simple terms, it took us five days to write the content of the report in a way that was understandable. And because we found so many violations, there was a lot of content to create and screenshots to capture.